Beyond Compliance: Building Trust Through AI Governance and Control

A joint perspective from Velatir and Saidot

When 673 pages of chat logs from Tromsø municipality became public in 2025, they revealed something that should concern every board director and executive: entire sections of an official government report were 100% AI-generated, complete with fabricated research sources, and no human had verified any of it. The project leader's instruction to ChatGPT was chilling in its brevity: "Fill out the rest. No need for further quality control."

This wasn't just a municipal scandal. It was a preview of what happens when organizations rush to adopt AI without the operational frameworks to govern it. As the EU AI Act reshapes corporate accountability and boards face increasing scrutiny over AI oversight, the question is no longer whether to act, but how quickly organizations can implement the dual pillars of responsible AI deployment: operational governance and control.

Why Companies Must Act Now

The regulatory landscape has fundamentally shifted. The EU AI Act entered into force on August 1, 2024. Its prohibitions and AI literacy obligations have applied since February 2, 2025, and its governance rules and obligations for general-purpose AI models since August 2, 2025. The most significant milestone, general applicability, is scheduled for August 2026, when a significant part of the high-risk system obligations will begin to apply. Organizations operating in or serving the European market can no longer treat AI governance as a future concern.
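For teams that want to track these dates in their own compliance tooling, the timeline can be expressed as a small lookup. This is only an illustrative sketch: the function names and labels are our own, and the day used for the 2026 milestone (August 2) comes from the Act's application provisions rather than from the summary above.

```python
from datetime import date
from typing import Optional

# Milestones as summarized in the text. The labels are paraphrases, and the
# exact day of the 2026 milestone (2 August) is an assumption drawn from the
# Act itself, since the text only says "August 2026".
MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibitions and AI literacy obligations apply"),
    (date(2025, 8, 2), "Governance rules and general-purpose AI model obligations apply"),
    (date(2026, 8, 2), "General applicability, including a significant part of high-risk obligations"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[int, str]]:
    """Return (days remaining, label) for the next milestone, or None."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    if not upcoming:
        return None
    start, label = min(upcoming)
    return (start - on).days, label
```

Calling `next_milestone(date.today())` gives a simple countdown that can be surfaced in a board report or dashboard.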

The Tromsø case demonstrates the reputational and operational risks that emerge when AI is deployed without proper controls. When journalists requested the chat logs under Norway's Freedom of Information Act, the municipality initially refused, claiming they were merely "electronic traces" not subject to transparency laws. The County Governor disagreed, establishing in a landmark ruling that AI chat logs are documents covered by public access laws and must be available for scrutiny. This precedent signals a clear direction: AI interactions are not invisible, and organizations will be held accountable for how AI is used in their operations.

Operational Governance: AI Inventorying and Application of Risk-Based Governance Throughout the AI Lifecycle

Effective AI governance begins with visibility. Organizations cannot manage what they cannot see, and the first requirement of responsible AI deployment is knowing where, when, and how AI is being used across the enterprise. This is where AI mapping capabilities become essential, providing the operational governance layer that enables transparency and risk management.

Saidot's role in operational governance encompasses three critical functions:

Inventory of AI assets: Inventorying AI assets, systems, agents, models and datasets makes them visible and subject to governance. Organizations need a consistent inventory to form the baseline for AI governance. Because AI systems are composed of multiple connected components, governance must operate at the right level of granularity. The EU AI Act requires organizations to detect AI systems and classify them according to their risk level. AI governance platforms support this through automated inventorying and classification based on regulations and internal policies alike.

Risk and compliance automation: Effective governance requires recognizing that AI systems carry different levels of risk and must not be treated the same. To avoid unnecessary governance burdens on AI teams, organizations must implement risk-based rules. A system’s risk posture is shaped by its components, models, data, products, agents, tools, context and use. Automating this process helps consistently capture system risks and assign appropriate controls, while ensuring visibility into control implementation to provide audit-ready evidence.

Trigger-based governance throughout lifecycle: AI systems continuously evolve as models are retrained, data or features change or new components are added. Many enterprise AI systems now rely on third-party models, APIs and plug-ins that may update without notice. Trigger-based governance responds to these events by automatically initiating risk reassessment, control revalidation or compliance workflows. This lifecycle-aware approach ensures continuous compliance, prevents hidden risk accumulation and increases transparency across the AI supply chain.
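To make the three functions above concrete, here is a minimal sketch of how an inventory entry, a risk-based control rule and a lifecycle trigger could fit together. It is not Saidot's data model or API. The risk tiers, control names, event names and the rule that a system inherits the highest tier among its components and its context of use are all simplifying assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Simplified, hypothetical tiers; real classifications follow the
    # applicable regulation and internal policy.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


# Hypothetical control catalogue: the controls each tier requires.
REQUIRED_CONTROLS = {
    RiskTier.MINIMAL: {"inventory_entry"},
    RiskTier.LIMITED: {"inventory_entry", "transparency_notice"},
    RiskTier.HIGH: {"inventory_entry", "transparency_notice",
                    "human_oversight", "audit_logging"},
}

# Hypothetical lifecycle events and the workflows they initiate.
TRIGGERS = {
    "model_retrained": ["risk_reassessment", "control_revalidation"],
    "third_party_model_updated": ["risk_reassessment"],
    "component_added": ["risk_reassessment", "compliance_review"],
}


@dataclass
class AISystem:
    name: str
    components: dict[str, RiskTier]   # component name -> assessed tier
    context_tier: RiskTier            # tier implied by context and use
    implemented_controls: set[str] = field(default_factory=set)

    def risk_tier(self) -> RiskTier:
        """Assumed rule: the system takes the highest tier it contains."""
        tiers = list(self.components.values()) + [self.context_tier]
        return max(tiers, key=lambda tier: tier.value)

    def missing_controls(self) -> set[str]:
        """Controls required for this tier that are not yet evidenced."""
        return REQUIRED_CONTROLS[self.risk_tier()] - self.implemented_controls


def workflows_for(event: str) -> list[str]:
    """Return the workflows a lifecycle event should start (none if unknown)."""
    return list(TRIGGERS.get(event, []))


# Example inventory entry for a hypothetical report-drafting assistant.
assistant = AISystem(
    name="report-drafting-assistant",
    components={"third_party_llm": RiskTier.LIMITED,
                "internal_docs_dataset": RiskTier.MINIMAL},
    context_tier=RiskTier.HIGH,
    implemented_controls={"inventory_entry"},
)
```

In this sketch, `assistant.missing_controls()` lists the gap between what the tier requires and what has been implemented, and `workflows_for("third_party_model_updated")` shows how an unannounced supplier update would reopen the risk assessment.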

Operational Control: Detecting, Stopping and Escalating AI Actions

Detection tells you what happened. Control determines what should happen. While visibility is essential, it is insufficient without the operational controls that enforce responsible AI use in real time. This is where Human-in-the-Loop (HITL) systems like Velatir become critical, providing the control layer that prevents AI incidents before they occur.

Velatir's role in operational control addresses three fundamental requirements:

Real-time enforcement of governance policies. Organizations need systems that don't just document AI usage but actively enforce rules about how AI can be used. This means configuring mandatory checkpoints where AI outputs cannot advance without human review, creating approval workflows that match organizational risk tolerance, and ensuring that policies are technically enforced. In the Tromsø scenario, a properly configured HITL system would have stopped AI-generated sections from moving forward on the strength of a "no need for further quality control" instruction, requiring instead that qualified personnel review and approve AI-generated content before incorporation into official documents.

Structured approval workflows that embed expertise at critical decision points. Article 14 of the EU AI Act requires that high-risk AI systems be designed and developed with appropriate human-machine interface tools so they can be effectively overseen by natural persons during the period in which they are in use, with human oversight aiming to prevent or minimize risks to health, safety or fundamental rights. HITL platforms enable this by routing AI outputs to appropriately qualified personnel, ensuring that decisions receive the level of human oversight they require, and creating clear accountability chains for AI-assisted work.

Security and access controls that protect against misuse. Operational control extends beyond governance to security, ensuring that AI capabilities are available only to authorized personnel, that usage is logged and auditable, and that sensitive AI interactions are protected from unauthorized access. This security dimension becomes increasingly important as AI systems handle more critical business functions and as regulatory scrutiny intensifies.
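The three requirements above can be illustrated with a small approval gate: output cannot advance until an authorized reviewer approves it, and every attempt is logged. This is a simplified sketch under our own assumptions, not Velatir's implementation or API. The class, method and log field names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class ApprovalRequired(Exception):
    """Raised when an output tries to advance without the required review."""


@dataclass
class ReviewGate:
    authorized_reviewers: set[str]
    audit_log: list[dict] = field(default_factory=list)
    _approved: set[str] = field(default_factory=set)

    def _log(self, action: str, output_id: str, actor: str) -> None:
        # Every decision is recorded so usage stays auditable.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "output_id": output_id,
            "actor": actor,
        })

    def approve(self, output_id: str, reviewer: str) -> None:
        """Access control: only authorized reviewers may approve."""
        if reviewer not in self.authorized_reviewers:
            self._log("approval_denied", output_id, reviewer)
            raise PermissionError(f"{reviewer} may not approve AI outputs")
        self._approved.add(output_id)
        self._log("approved", output_id, reviewer)

    def publish(self, output_id: str, actor: str) -> bool:
        """Mandatory checkpoint: unreviewed output cannot advance."""
        if output_id not in self._approved:
            self._log("publish_blocked", output_id, actor)
            raise ApprovalRequired(f"{output_id} has not been reviewed")
        self._log("published", output_id, actor)
        return True
```

The design point is that the checkpoint lives in the workflow itself. A user who asks to skip quality control still hits `ApprovalRequired`, and the blocked attempt appears in the audit log.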

The control layer transforms AI from an unpredictable tool into a governed capability. It provides organizations with the confidence that AI is being used responsibly, that humans remain in control of critical decisions, and that policies are enforced consistently across the enterprise. The business case for operational control is compelling: as detailed in Velatir's analysis of AI control ROI, preventing a single governance incident or enabling safe AI adoption in one critical business process can justify years of investment in control infrastructure.

The Governance and Control Matrix: Why Both Are Essential

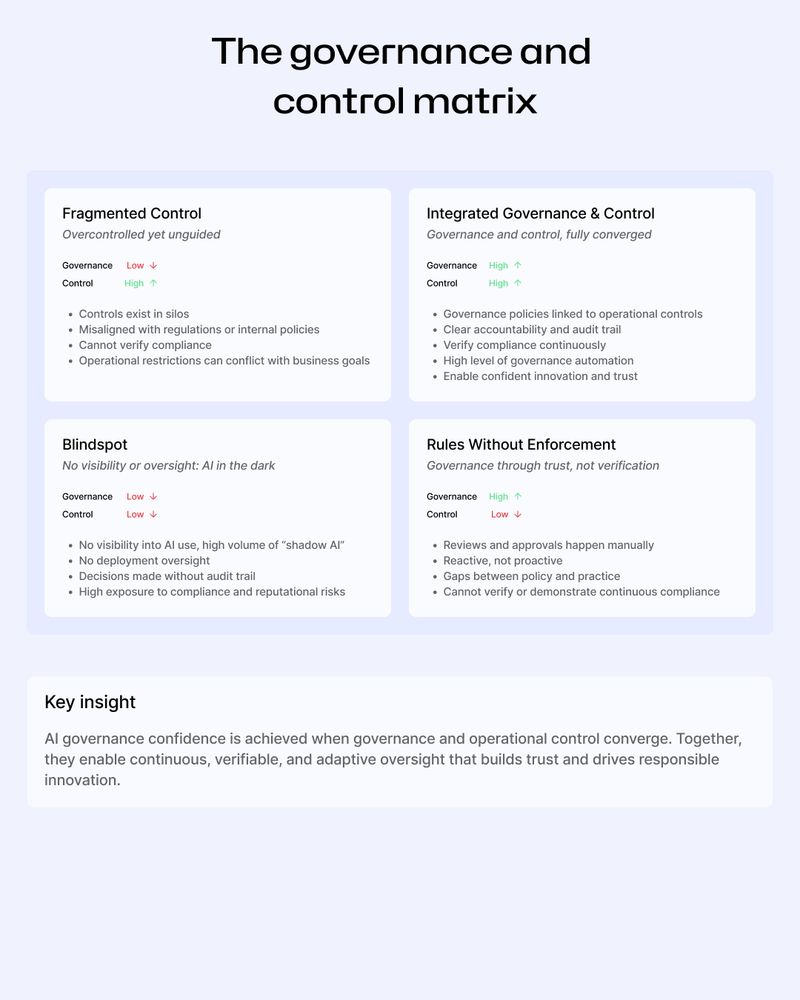

Organizations often ask whether governance or control is more important. This question misunderstands the nature of effective AI governance. Governance and control are not alternatives but complementary capabilities that together create comprehensive AI governance.

Consider a simple two-by-two matrix.

On one axis, governance capability: can the organization identify where AI is being used, and detect what risks it introduces and which controls are required?

On the other axis, control capability: can the organization enforce and monitor those controls to ensure AI is used in alignment with policies?
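The two axes can be written down as a simple lookup. The quadrant descriptions below are our own illustrative wording, not labels taken from the figure.

```python
# Hypothetical quadrant descriptions keyed by
# (has governance capability, has control capability).
QUADRANTS = {
    (True, True): "Governed and enforced: risks are known and controls are applied",
    (True, False): "Visible but unenforced: risks are known, policies rely on goodwill",
    (False, True): "Enforced but blind: controls exist without knowing what they should cover",
    (False, False): "Blind and ungoverned: AI use is neither visible nor controlled",
}


def governance_control_quadrant(has_governance: bool, has_control: bool) -> str:
    """Place an organization in the two-by-two governance and control matrix."""
    return QUADRANTS[(has_governance, has_control)]
```

An organization that can inventory and risk-assess its AI but cannot enforce the resulting controls would land in `governance_control_quadrant(True, False)`.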

The EU AI Act Lens: AI Governance as Fiduciary Duty

The EU AI Act represents more than regulatory compliance; it signals a fundamental shift in how corporate governance must approach AI. The Act's human-centric approach and emphasis on oversight create new responsibilities for boards and executives that parallel traditional fiduciary duties.

Fiduciary duties require directors to act in good faith, with appropriate care, and in the interests of stakeholders. Directors must consider long-term consequences for the company and its reputation, as well as the potential impact on employees, suppliers, customers, and the local community. When AI systems make or influence decisions affecting these stakeholders, directors cannot claim ignorance about how those systems operate or whether they're properly governed.

The AI Act's requirements for human oversight, transparency, and accountability effectively translate these fiduciary principles into technical and operational mandates. Article 14 requires that high-risk AI systems enable natural persons to properly understand relevant capacities and limitations, remain aware of possible automation bias, correctly interpret system outputs, and decide when not to use or to override the system. These aren't just technical specifications but governance obligations that demand board-level attention.

The Tromsø case offers a stark illustration. When AI-generated content containing fabricated sources appeared in official documents, it wasn't just a technical failure or a training gap. It represented a governance failure at the highest level: systems that allowed consequential decisions to be made without proper oversight, controls that could be bypassed with a simple instruction to skip quality control, and leadership that treated AI as an oracle rather than a tool requiring human judgment.

The Practical Board AI Checklist

Boards and executives need concrete ways to assess their organization's AI governance posture. This checklist provides a framework for board-level discussions about AI governance and control capabilities:

Organizations that cannot answer these questions affirmatively face significant exposure. Organizations that can answer them position themselves ahead of the compliance curve and demonstrate the kind of responsible AI governance that builds stakeholder trust.

The Path Forward: Building Governance That Enables Innovation

The combination of regulatory requirements, board-level accountability, and public scrutiny has transformed AI governance from an optional best practice into a business-critical requirement. Organizations should no longer deploy AI tools without robust frameworks for governance and control.

The Tromsø incident offers lessons that extend far beyond Norwegian municipalities. When organizations lack visibility into AI usage and controls over AI deployment, the results are predictable: fabricated information making it into consequential documents, reputational damage that takes years to repair, regulatory scrutiny that extends across the enterprise, and a cautionary tale that competitors use to position themselves as more responsible alternatives.

Why the Governance and Control Framework Matters

At Velatir and Saidot, we believe that effective AI governance requires two distinct but complementary capabilities that most organizations currently lack. This isn't theoretical speculation. We've worked with enterprises across financial services, healthcare, public sector, and technology to understand where AI governance fails and what it takes to succeed.

What we've learned is that organizations consistently underestimate the complexity of AI governance. Many are still operating in a blind spot where AI-specific risks are not recognized or acted on. Organizations aren't used to operating in an environment where AI is built from third-party components and therefore requires significant third-party risk management.

Organizations assume policies will be followed, that training will be remembered, that employees will exercise good judgment under pressure. Reality proves otherwise. Even well-intentioned professionals, when offered an expedient path through AI assistance, will take shortcuts that undermine governance objectives.

This is why we advocate for the governance and control framework. It's not simply about regulatory compliance, though it certainly achieves that. It's about building governance infrastructure that works with human nature rather than against it, that makes responsible AI use the path of least resistance, and that creates accountability through visibility rather than through hope.

Saidot's operational governance layer addresses the visibility challenge that undermines most AI governance efforts. Organizations cannot manage what they cannot measure. By providing comprehensive visibility across AI deployments, Saidot enables organizations to answer the questions regulators increasingly ask: Where is AI being used? What risks does your AI pose to your organization and stakeholders? How do you verify compliance with disclosure obligations? Without these capabilities, those questions have no good answers. With them, organizations transform uncertainty into evidence-based governance.

Velatir's operational control layer addresses the enforcement challenge, turning policies from paper tigers into operational safeguards. Governance frameworks fail when they depend entirely on human discretion, when they can be bypassed under deadline pressure, or when they create friction that employees route around. Embedding Velatir directly into AI workflows ensures that governance policies are operational rather than aspirational. The system enforces what the organization requires, creating accountability through technical controls rather than through trust alone.

Together, these capabilities answer the fundamental governance question boards must address: Can we use AI responsibly while maintaining accountability, building stakeholder trust, and satisfying regulatory requirements? Organizations with mature governance and control capabilities can answer yes with evidence. Organizations without them face an uncomfortable choice: limit AI adoption to avoid risk, or proceed with blind, ungoverned AI deployment and accept the consequences.

What This Means for Your Organization

If you're reading this as a board director, chief legal officer, chief compliance officer, or chief risk officer, you likely recognize elements of your own organization in this discussion. Perhaps you've deployed AI tools without fully understanding usage patterns. Perhaps you have policies about AI use but lack technical enforcement. Perhaps you're concerned about the EU AI Act's August 2026 compliance deadlines but uncertain where to start.

The encouraging news is that building comprehensive AI governance is achievable, and organizations that start now gain significant advantages over those that delay. The governance and control framework provides a clear roadmap: establish visibility into current AI usage, identify risks for AI and its components, implement technical controls that enforce governance policies, create audit trails that demonstrate compliance, and build the organizational capability to govern AI at scale.

The Tromsø case demonstrates where hope-based AI governance leads. The EU AI Act establishes where regulation is heading. Forward-looking organizations recognize that the combination of governance and control isn't just about avoiding scandals or satisfying regulators. It's about building the trust required to innovate with confidence, about demonstrating to customers and stakeholders that AI enhances rather than replaces human judgment, and about positioning AI governance as a competitive differentiator rather than a compliance burden.

The organizations that will lead their industries in the AI era aren't necessarily those with the most advanced AI capabilities. They're the organizations that can deploy AI responsibly, prove they're doing so, and build the stakeholder trust that enables continued innovation. This is the promise of comprehensive AI governance: not limiting what AI can do, but ensuring AI does it right.

This is the work we do at Velatir and Saidot, and we welcome the opportunity to discuss how the governance and control framework can strengthen your organization's AI governance posture.

Ready to build comprehensive AI governance?

Learn how Velatir and Saidot can help your organization meet EU AI Act requirements while enabling confident AI adoption through the combination of operational control and operational governance.

Velatir | Control your AI – everywhere. Contact Velatir

Saidot | Graph-based AI governance Contact Saidot