Why Even Cyber Professionals Need AI Guardrails

The acting director of CISA uploaded sensitive contracting documents into public ChatGPT last August. The uploads triggered multiple security alerts within the first week. The documents were marked for official use only.

This happened at the agency responsible for federal cybersecurity.

By default, the consumer version of ChatGPT can use what people type or upload to train OpenAI's models. The service is used by over 700 million people. Content absorbed into a model can, in principle, inform responses to anyone, including adversaries.

The director had obtained special permission to use ChatGPT. Other DHS staff remained blocked from the tool. The permission was described as short-term and limited. The usage exceeded those boundaries.

An internal review followed. The outcome remains undisclosed.

What This Reveals About Shadow AI

People use AI tools because the tools are useful. This applies equally to senior leadership and frontline staff. Usefulness drives adoption faster than policy can keep up.

The CISA case demonstrates a pattern. Someone with authority requested access. Access was granted under specific conditions. Those conditions were not maintained. Security systems detected the problem after the fact.

Detection after upload means the data already left your control.

Why Blocking Fails

Organisations often respond to AI risk by blocking tools. DHS blocked ChatGPT for most staff. The acting director obtained an exception.

Blocking creates two problems. First, it pushes usage into personal accounts and unmonitored channels. Second, it concentrates risk with those who can override the block.

You need visibility into usage. Blocking removes that visibility.

The Visibility Problem

Most organisations discover shadow AI usage the same way CISA did. An alert fires after sensitive data has already been transmitted. By that point, the question shifts from prevention to damage assessment.

Data sent to public AI services cannot be recalled. It can enter training pipelines. It can inform future model responses. It may appear in outputs to other users.

Organisations need to know which AI tools are in use before data leaves their environment. This requires continuous discovery across your network. It requires seeing all usage, including approved exceptions and personal accounts.

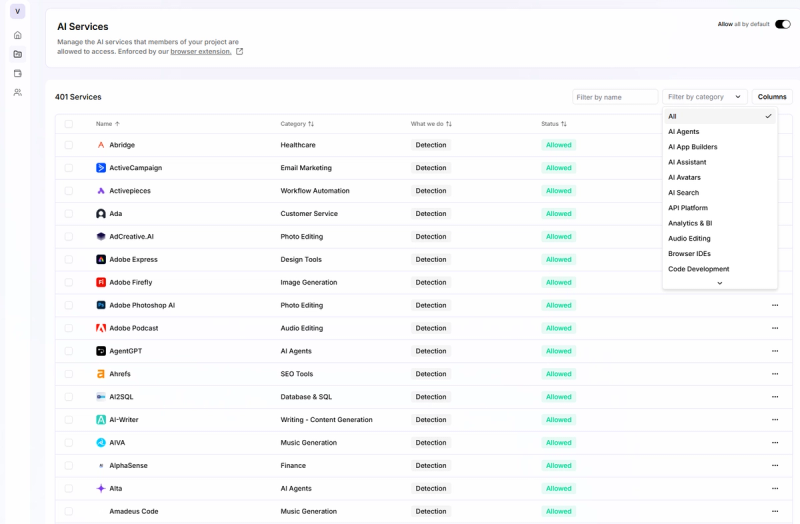

The challenge extends beyond familiar names like ChatGPT or Claude. Over 5,000 AI services exist today. New ones launch weekly. Your staff will find and use whichever tools solve their immediate problems.

What Governance Actually Looks Like

AI governance starts with visibility. You map which tools are in use, who is using them, and what data they are accessing.

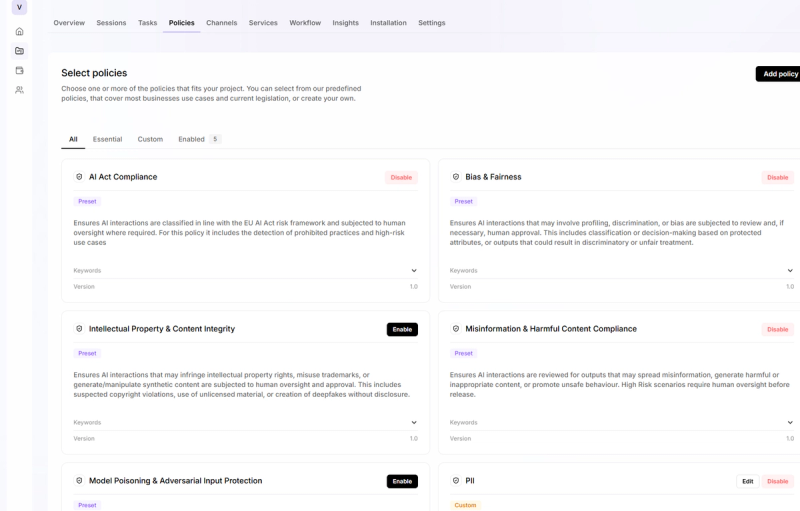

Then you apply controls based on risk. Some uses carry minimal exposure. Others involve regulated data or proprietary information. The controls should match the context.

You need to decide which AI uses are acceptable and which carry too much risk. You need to see when someone uploads a document to public ChatGPT. You need the ability to prevent that upload if it contains sensitive content. You need to allow other AI usage to continue.

These decisions require two capabilities. First, discovery to see which AI tools are in use. Second, enforcement to apply your decisions across the organisation.

How Discovery Works

Every interaction with an AI service creates a trace. Someone sends a prompt. Someone uploads a file. Someone receives a response. These interactions cross your network boundary.

Velatir captures these traces as they happen. Each trace gets evaluated against your policies. The system identifies which AI service is being used, what data is being transmitted, and who initiated the interaction.

This happens before the data reaches the external service. You see the interaction at decision time, when you can still intervene.

Discovery runs continuously across your network. It tracks over 5,000 AI services. When a new service appears in your environment, it gets identified and categorised by risk level.
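To make the identification step concrete, here is a minimal sketch of how a destination URL could be matched against a catalogue of known AI services. It is an illustration only, not Velatir's implementation. The `AIService` type, the `CATALOGUE` entries, the risk labels, and `identify_service` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass(frozen=True)
class AIService:
    name: str
    category: str
    risk: str  # "low", "medium", or "high" (illustrative labels)


# Hypothetical catalogue. A real one would hold thousands of entries,
# and the risk labels here are placeholders, not assessments.
CATALOGUE = {
    "chatgpt.com": AIService("ChatGPT", "chat assistant", "medium"),
    "claude.ai": AIService("Claude", "chat assistant", "medium"),
}


def identify_service(url: str) -> Optional[AIService]:
    """Return the catalogue entry for a URL, or None if the host is unknown."""
    host = (urlparse(url).hostname or "").lower()
    parts = host.split(".")
    # Try the full host first, then each parent domain (a.b.c, then b.c).
    for i in range(len(parts) - 1):
        candidate = ".".join(parts[i:])
        if candidate in CATALOGUE:
            return CATALOGUE[candidate]
    return None
```

A `None` result is the interesting case. It marks a destination the catalogue has not seen yet, which is the point where a newly appeared service would be queued for categorisation.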

Smart Guardrails Over Binary Blocking

Most organisations face a choice between allowing all AI usage and blocking it entirely. This creates problems either way.

Smart guardrails give you a third option. You define policies that match your risk tolerance. The system enforces those policies at the point of interaction.

Someone attempts to paste customer data into ChatGPT. The guardrail checks your policy. If customer data is restricted, the action gets blocked. The user sees why. They can proceed with a different approach that complies with policy.

Someone uses Claude to draft a marketing email. The guardrail checks the interaction. Marketing emails carry low risk. The action proceeds. No friction.

The same tool. Two different uses. Two different risk levels. Two different outcomes based on your policy.
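The two scenarios above can be sketched as a content check that runs before a prompt is sent. This is a simplified illustration under stated assumptions. The email regex is a crude stand-in for a real data classifier, and `RESTRICTED_CLASSES`, `Verdict`, `classify`, and `check_prompt` are hypothetical names, not part of any product API.

```python
import re
from dataclasses import dataclass

# Crude stand-in for a real classifier: treat any email address as customer data.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical policy: data classes that may not be sent to external AI services.
RESTRICTED_CLASSES = {"customer_data"}


@dataclass
class Verdict:
    allowed: bool
    reason: str  # shown to the user so they know why an action was stopped


def classify(text):
    """Return the set of data classes detected in the text."""
    classes = set()
    if EMAIL_PATTERN.search(text):
        classes.add("customer_data")
    return classes


def check_prompt(text):
    """Block the prompt if it contains any restricted data class."""
    hits = classify(text) & RESTRICTED_CLASSES
    if hits:
        return Verdict(False, "Blocked: prompt contains " + ", ".join(sorted(hits)))
    return Verdict(True, "Allowed: no restricted data detected")
```

With this policy, `check_prompt("Refund jane.doe@example.com for order 1182")` is blocked with a reason the user can read, while `check_prompt("Draft a launch email for our spring sale")` goes through untouched.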

This allows you to set company-wide rules that reflect how you think about AI risk. Block high-risk actions. Allow low-risk ones. Create accountability for everything in between.

When Blocking Makes Sense

Some situations require complete blocking. You may need to block certain high-risk AI services entirely across your organisation. You may need to prevent specific departments from accessing external AI tools.

Velatir supports both approaches. You can block individual services from the catalogue of over 5,000 tracked AI services. You can apply blanket blocks to categories of services. You can set different rules for different parts of your organisation.
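One way to picture these three kinds of block is as rules that each match on a service, a category, a department, or any combination. The sketch below is an assumption about how such rules could be modelled, not a description of the product's configuration format. `BlockRule`, `RULES`, `is_blocked`, and the service name `ExampleTranscriber` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BlockRule:
    # A field left as None matches anything.
    service: Optional[str] = None
    category: Optional[str] = None
    department: Optional[str] = None

    def matches(self, service, category, department):
        return all(
            wanted is None or wanted == actual
            for wanted, actual in (
                (self.service, service),
                (self.category, category),
                (self.department, department),
            )
        )


# Hypothetical rule set covering the three cases in the text.
RULES = [
    BlockRule(service="ExampleTranscriber"),   # one service, organisation-wide
    BlockRule(category="image generation"),    # a whole category of services
    BlockRule(department="legal"),             # all external AI for one department
]


def is_blocked(service, category, department):
    """An interaction is blocked if any rule matches it."""
    return any(rule.matches(service, category, department) for rule in RULES)
```

Under these rules a sales user can still reach a chat assistant, while the same request from the legal department is stopped.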

Blocking becomes one option among many. You choose when to use it based on risk level and business need.

Enforcement That Scales

Policies only work if they apply consistently. A rule that exists in a handbook carries no weight if people can ignore it.

Velatir enforces your policies across your organisation. When someone interacts with an AI service, the system evaluates that interaction against your rules. High-risk actions get blocked. Borderline cases can require approval. Low-risk usage continues.
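The block, approve, and allow tiers can be sketched as a small decision function. The version below assumes each interaction has already been given a risk level for the service and for the data involved, and that the higher of the two decides the outcome. That rule, the level names, and the `decide` function are illustrative assumptions. Your own policy may weigh the two differently.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    BLOCK = "block"


# Risk levels ordered from least to most severe (illustrative labels).
RISK_ORDER = ["low", "medium", "high"]

# Hypothetical mapping from the resulting risk level to an enforcement action.
ACTION_FOR_LEVEL = {
    "low": Action.ALLOW,
    "medium": Action.REQUIRE_APPROVAL,
    "high": Action.BLOCK,
}


def decide(service_risk, data_risk):
    """Take the higher of the two risk levels and map it to an action."""
    level = max(service_risk, data_risk, key=RISK_ORDER.index)
    return ACTION_FOR_LEVEL[level]
```

Taking the higher of the two levels means a low-risk tool does not excuse high-risk data, and the reverse.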

You maintain the ability to block specific services entirely when that makes sense. You also maintain the ability to allow services while restricting what data can be shared with them.

Your enforcement decisions become technical guardrails. People see the boundaries in real time. They understand what they can and cannot do before they attempt it.

Someone must own the consequences when data leaves your organisation. Someone must decide where the acceptable risk boundary sits.

The CISA incident happened because a human made a series of decisions. Request access. Upload documents. Continue past the authorised period. Each decision carried risk.

Governance systems should make those risks visible at decision time. They should require choices about sensitive data. They should create accountability for outcomes.

When someone attempts to upload a document to an external AI service, they see a choice. Proceed with the upload or cancel the action. The system logs the decision either way.
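Logging the decision either way could look like the sketch below: one record per choice, written whether the user proceeds or cancels. The field names and the `record_decision` function are hypothetical, and the in-memory list stands in for whatever audit store you actually use.

```python
import json
from datetime import datetime, timezone


def record_decision(user, service, filename, proceeded, log):
    """Append one audit record for an upload decision and return it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "service": service,
        "file": filename,
        # Both outcomes are recorded, not only the risky one.
        "decision": "proceed" if proceeded else "cancel",
    }
    log.append(json.dumps(entry))  # one JSON line per decision
    return entry
```

Recording cancellations as well as uploads shows where the prompt changed someone's mind, which is useful evidence that the control is working.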

Moving Forward

You already have AI usage in your organisation. The question is whether you can see it and whether you have controls in place.

Shadow AI exists because people need to work efficiently. You need to make that usage visible, apply proportional controls, and maintain human accountability for data decisions.

Governance enables adoption while preserving control.
